SQL 101 - Working with DUPLICATES

How often have you got duplicate rows in the results of your SQL queries? My guess is that since you’re here with me it’s probably more than 1 time. Usually duplicate lines aren’t something you want, but is this always the case? How to check if the output data contains duplicate entries and how to remove them if necessary?

You will find the answers to these questions in this post.

TL;DR

Duplicates in the data are not unusual.

First of all, answer the question whether you are “expecting” them or are they due to an obvious error in the data.

DISTINCT or the ROW_NUMBER () OVER () + WHERE combination will most often help you to remove them.

What is a duplicate?

Perhaps a non-book definition, but let’s try: “identical in value to a row in the source table (or in the result dataset) to another row in the same table or result.”

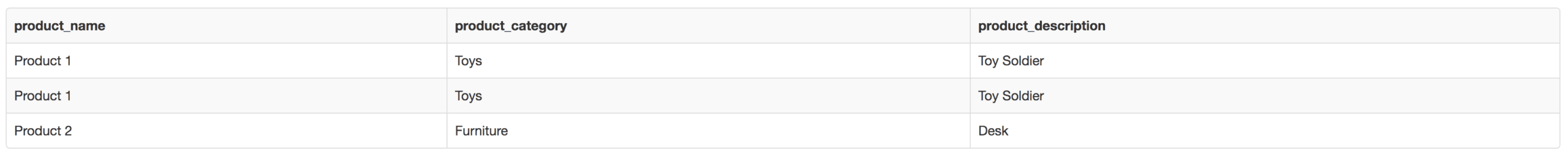

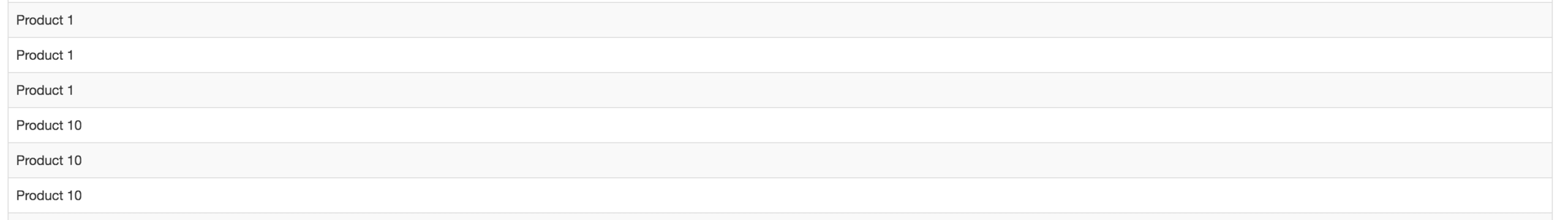

Example

In the above context, Product 1 is a duplicate in the result set - all columns have the same values.

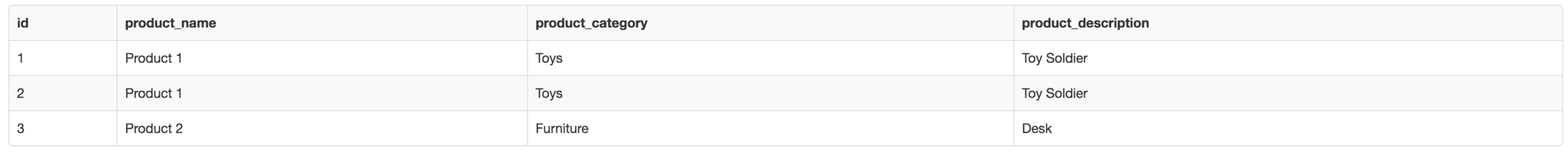

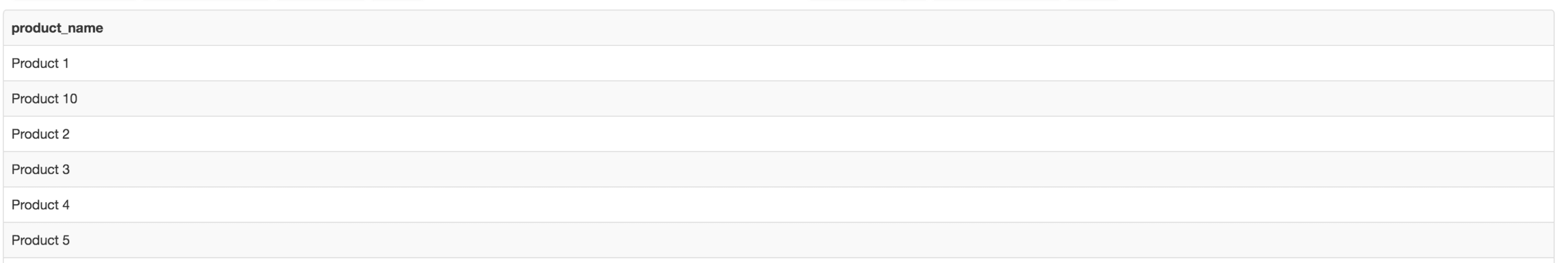

And what about this case:

Above, we see a situation where the lines for Product 1 differ only in identifier (autoincrement), or is it a duplicate?

As defined above, no, but we could safely assume that a mistake has been made with the database model.

Tables have no constraints, and there is no other field (column) that defines why the same row was inserted. Contrary to appearances, such situations are not uncommon, and we, as data workers, have to deal with such situations.

Example in SQLFiddle: http://sqlfiddle.com/#!17/c4b89/2

Why duplicates happen?

What could be the causes of duplicates:

- Incorrect database model - no constraints as to the values that we can add (e.g. Primary Keys / Unique Keys)

- No propagation of changes to dependent objects - if the table in the source system has been extended with e.g. the aktwyne_od flag (for history tracking), and other systems using the table have not been updated with this change, it is very likely that duplicates will appear

- Incorrect construction of table joining conditions

- Human errors - e.g. manual insertion of records (especially to a table without limits - see incorrect database model)

- Lack of understanding of the data source - e.g. retrieving only part of the columns from the source table, in which you will not be able to clearly see the difference between the records (e.g. omitting the timestamp, active flag, etc.)

How to find duplicates?

To answer this question, first, it’s good to know if you can specify a column or group of columns in your result set or table to be unique?

Yes, I have such a column (group of columns). Suppose a column is named product_name.

SELECT count(f.product_name)

FROM products f;

The above returns the total number of records with duplicates.

SELECT count(distinct f.product_name)

FROM products f;

The above will return the total number of records without duplicates. No, all columns are unique. In this case, we can either create a unique string for all columns (hash) or check by all columns.

Without HASH

SELECT count(f.*)

FROM products f;

The above returns total number of records with duplicates. Result is 100.

SELECT count(distinct f.*)

FROM products f;

The above returns total number of records without duplicates. Result is 61.

With HASH

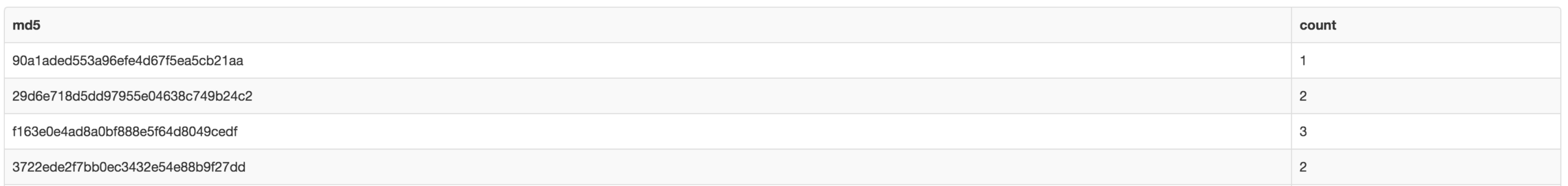

SELECT md5(f.*::text), count(*)

FROM products f

GROUP BY md5(f.*::text);

md5 - is a function that generates a string based on columns or input column.

In the above case, we get unique strings with a total number of occurrences.

“Well cool, but apart from the fact that they are, I want to know which rows they are”.

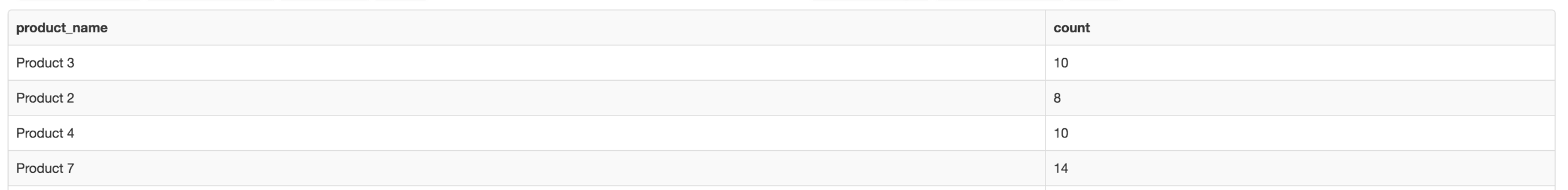

COUNT + GROUP BY + HAVING (for product_name)

SELECT product_name, count(*)

FROM products

GROUP BY product_name

HAVING count(*) > 1;

- group by - will create groups of lines by breaking by product_name

- having count (*)> 1 - will filter out products that appear only once from the result

As a result, you will get a list of duplicates.

SELECT product_name

FROM (

SELECT product_name, count(*) over(partition by product_name) rc

FROM products

) sq

WHERE rc > 1;

- count (*) over (partition by product_name) - for each row count the number of rows by grouping them by product_name

- where rc> 1 - show those lines where the number of occurrences is greater than 1 (duplicates)

ROW_NUMBER + WHERE (sub-select - for product_name)

SELECT product_name

FROM (

SELECT product_name, row_number() over(partition by product_name) rc

FROM products

) sq

WHERE rc=2;

- row_number () over (partition by product_name) - numerates each row relative to the group created on the basis of the product_name column

- WHERE rc = 2 - display only those lines where the line number is 2 (this indicates duplicates, even if there are more than 2 duplicates for 1 product_name, we only want to see 1 line for later deletion)

How to remove duplicates

It depends on whether we want to remove them completely from the source table or only from the result set.

If removed from the result set, you can use one of the examples above, e.g. row_number.

SELECT product_name

FROM (

SELECT product_name, row_number() over(partition by product_name) rc

FROM products

) sq

WHERE rc=1;

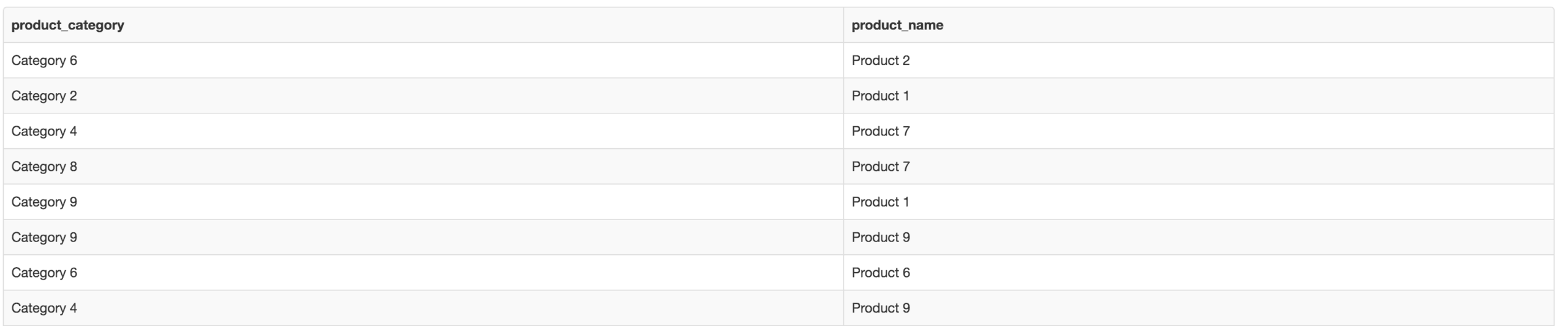

or use the option that SQL gives you straight out of the box - DISTINCT.

DISTINCT removes duplicates from the result set by examining all columns of the row.

SELECT DISTINCT product_category,product_name

FROM products;

In case of complete removal, use the DELETE + WHERE syntax, where in where you will add one of the subqueries as above.

All entries with a duplicate key will be deleted.

What if we want to delete only duplicates (leave 1 record). Then it would be good to add a column, e.g. row_number (as in the example above) and remove the records where row_number is> 1.

And what if we would not remove duplicates?

Sometimes duplicates are something “expected” (to a greater or lesser extent) and perhaps instead of throwing them out of the output data, we will want to show them, for example, to decision makers, to pinpoint a problem with the data. In such a situation, it is worth going back to the data model itself and trying to determine the cause of duplicates.

Example: In our case, it could be the product_description column. That is, a different description for different products, added to the source product table, but not included in the application changes. JOIN before adding this column, it could return the data correctly, after adding it we will get duplicates. In such a situation, it is worth considering grouping lines with different product_description to 1 line, in which subsequent descriptions will be list / text elements, separated by a separator.

SELECT product_name

, string_agg(COALESCE(product_description,''), ' / ')

FROM products

GROUP BY product_name;

- string_agg - creates a uniform text from elements, separated in our case by “/”

- coalesce - allows you to change the NULL value in a column to a string declared by the user (empty text here); Used here to avoid NULL values in the result set when concatenating text where one of the elements is just NULL.

All examples are available in SQL Fiddle: http://sqlfiddle.com/#!17/d66ec6/22

Summary

Good understanding of the systems we work on is the key to building correct queries.

Having knowledge about the data model, data storage and table constraints, can easily eliminate duplicates from the result set.

Sometimes, however, we need help and it is worth being aware that your arsenal to fight duplicates does not have to end with DISTINCT.

Want to be up to date with new posts and more? Subscribe to Data Craze Weekly newsletter.